Lesson 01 : Introduction

Too often, technical documentation makes too many assumptions about prior knowledge of terminology and standards for beginners to comfortably get started. So, to kick off this learning series, let’s go over some of the terminology and concepts we will be using when learning about SQL Server.

NOTE: Even if you are an experienced database professional or programmer, I encourage you to review this article anyway, because the same terminology is often used across different RDBMS with different meanings.

Microsoft SQL Server

Relational Database Management System (RDBMS)

SQL Server is one of many Relational Database Management Systems on the market, such as Oracle, PostgreSQL, Azure SQL Database, MySQL, etc. The Relational Database Management System manages all of the databases, data, transactional integrity, security, user access, etc. Other data storage and processing models have become increasingly popular over the years, but RDBMS are still widely used for data warehouse projects, business intelligence and more.

SQL Server Business Intelligence (BI)

SQL Server Integration Services (SSIS)

At its core, Integration Services is used to perform ETL for a data warehouse. ETL is an industry term that stands for Extract, Transform, Load: extracting data from a source, reshaping it, and loading it into a destination. The source and destination don’t need to be on the same platform either; they can be SQL Server databases, flat files, or other database platforms such as Oracle, DB2, Access, Sybase, PostgreSQL, cloud services, etc.
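To make the three ETL stages concrete, here is a minimal sketch in Python. It is not how SSIS packages are built (those are designed in a graphical tool); it only illustrates the extract, transform, and load steps. The CSV text, the `sales` table, and the function names are all made up for illustration, and an in-memory SQLite database stands in for the destination.

```python
import csv
import io
import sqlite3

# Hypothetical source data standing in for a flat-file export.
SOURCE_CSV = "id,name,amount\n1, alice ,10.50\n2,BOB,3\n"


def extract(text):
    """Extract: read raw rows from the source (a CSV string here)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: fix types and tidy up inconsistent name formatting."""
    return [
        (int(r["id"]), r["name"].strip().title(), float(r["amount"]))
        for r in rows
    ]


def load(conn, rows):
    """Load: write the cleaned rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales "
        "(id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in destination, not SQL Server
load(conn, transform(extract(SOURCE_CSV)))
loaded = conn.execute("SELECT id, name, amount FROM sales ORDER BY id").fetchall()
print(loaded)
```

Keeping each stage in its own function mirrors how ETL tools separate sources, transformations, and destinations.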

SQL Server Reporting Services (SSRS)

Reporting Services provides a set of on-premises tools and services that create, deploy, and manage mobile and paginated reports from Microsoft SQL Server. It is not uncommon for SSRS to be installed on a different machine from the SQL Server Database Engine instance it services.

SQL Server Analysis Services (SSAS)

Analysis Services is an analytical data engine used in decision support and business analytics. A typical implementation workflow includes installing a SQL Server Analysis Services instance, creating a tabular or multidimensional data model, deploying the model as a database to a server instance, processing the database to load it with data, and then assigning permissions to allow data access. When ready to go, the data model can be accessed by any client application supporting Analysis Services as a data source.

Power BI

Power BI is a business analytics service. It aims to provide interactive visualizations and business intelligence capabilities with an interface simple enough for end users (those who aren’t developers or DBAs) to create their own reports and dashboards.

NOTE: I think it is important to mention the SQL BI products now so that you can begin to understand how they fit into the overall ecosystem. However, this learning series will focus on the SQL Server Database Engine.

SQL Server Database Engine

Microsoft SQL Server was originally created as a variant of Sybase SQL Server for IBM OS/2 and released in 1989. Since then there have been numerous releases, but as of this writing the most recent (and still actively supported) versions are SQL Server 2012, 2014, 2016, 2017, and 2019.

The product names for SQL Server are denoted by the year of their release. The major version is often referenced in code and configuration metadata as a two to three digit number following this scheme:

| Product Name | Major Version | RTM Build Number |
| --- | --- | --- |
| SQL Server 2019 | 150 | 15.0.2000.5 |
| SQL Server 2017 | 140 | 14.0.1000.169 |
| SQL Server 2016 | 130 | 13.00.1601.5 |
| SQL Server 2014 | 120 | 12.00.2000.8 |
| SQL Server 2012 | 110 | 11.00.2100.60 |
| SQL Server 2008 R2 | 100 | 10.50.1600.1 |
| SQL Server 2008 | 100 | 10.00.1600.22 |
| SQL Server 2005 | 90 | 9.00.1399.06 |
| SQL Server 2000 | 80 | 8.00.194 |
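If you ever need to turn a build number back into a product name, the table above can be expressed as a small lookup. This is only a sketch in Python: the dictionary and the function names `product_name` and `major_version_code` are my own, and the lookup covers only the releases listed in the table.

```python
# Product names keyed by the (major, minor) parts of the build number.
# 2008 and 2008 R2 share major 10 and differ only in the minor part.
PRODUCT_BY_VERSION = {
    (15, 0): "SQL Server 2019",
    (14, 0): "SQL Server 2017",
    (13, 0): "SQL Server 2016",
    (12, 0): "SQL Server 2014",
    (11, 0): "SQL Server 2012",
    (10, 50): "SQL Server 2008 R2",
    (10, 0): "SQL Server 2008",
    (9, 0): "SQL Server 2005",
    (8, 0): "SQL Server 2000",
}


def product_name(build_number: str) -> str:
    """Return the product name for a build string such as '13.00.1601.5'."""
    major, minor = (int(part) for part in build_number.split(".")[:2])
    return PRODUCT_BY_VERSION.get((major, minor), "unknown")


def major_version_code(build_number: str) -> int:
    """Return the two to three digit major version, e.g. 150 for 15.x."""
    return int(build_number.split(".")[0]) * 10


print(product_name("15.0.2000.5"), major_version_code("15.0.2000.5"))
print(product_name("10.50.1600.1"), major_version_code("10.50.1600.1"))
```

Note that the leading zeros in builds like 13.00.1601.5 disappear once the parts are converted to integers, so "13.0" and "13.00" resolve to the same release.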

For a beginner, the term “SQL Server” may seem confusing because it is often used to mean different things in different contexts, including the physical hardware server, the application, or the database engine. To clarify, when I reference SQL Server in my posts I am referencing the database engine. If I am referencing the software product I will use the full product name, which is “Microsoft SQL Server”. If I am referring to the machine which hosts a SQL Server Instance, I will say some variation of “SQL Machine”, “SQL Node”, “SQL Host”, “SQL VM”, “SQL Box”, etc.

On Premises vs. Cloud

NOTE: For this learning series I will be focusing mostly on Microsoft SQL Server On Premises.

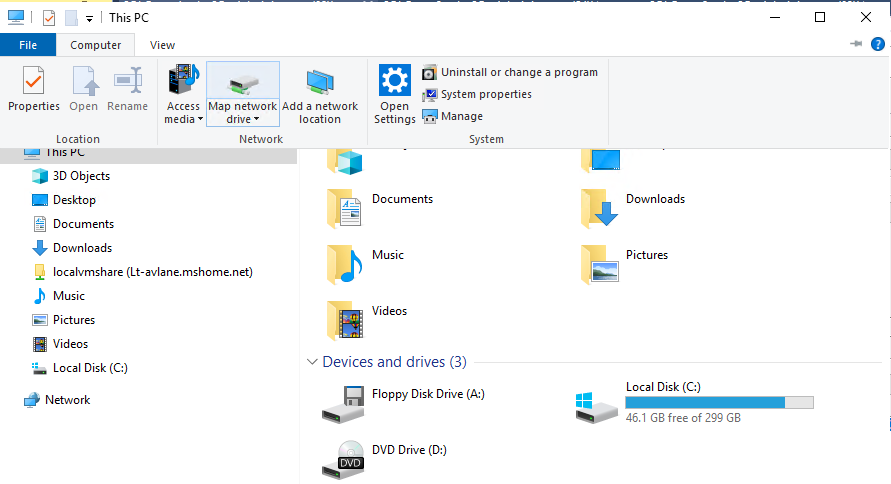

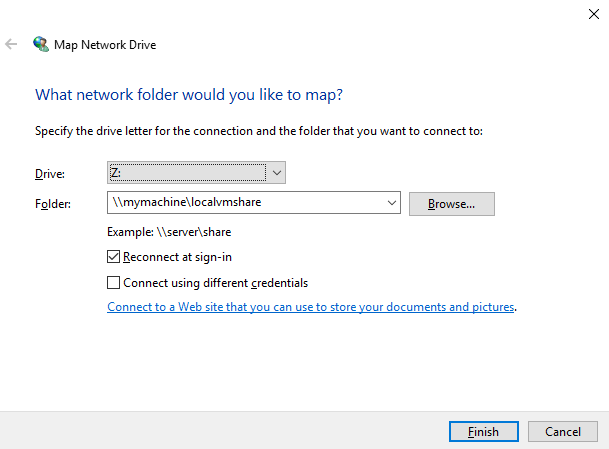

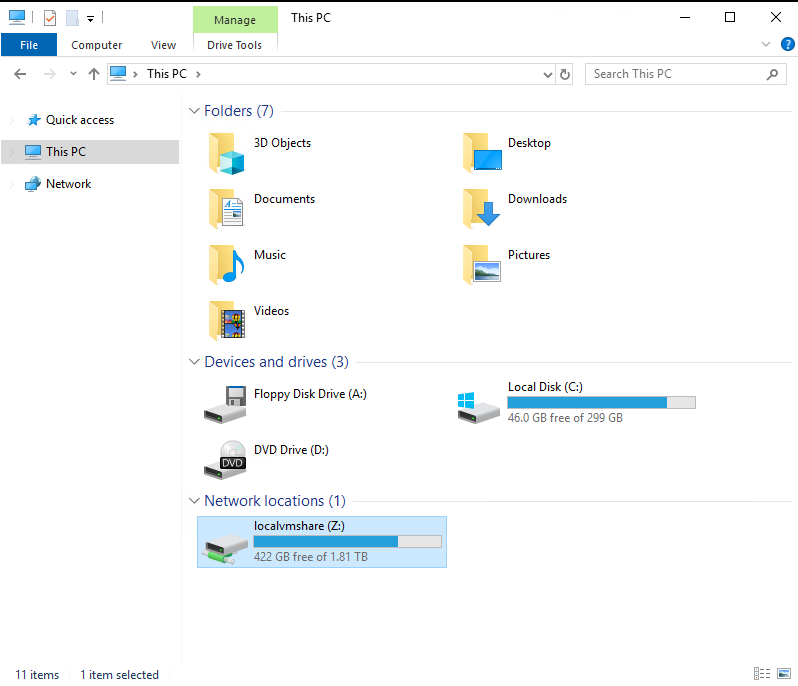

On Premises

Microsoft SQL Server was originally developed, and still is, as an On Premises (abbreviated as On Prem) software product. That means it is installed on a physical or virtual machine, running Windows or Linux, that is owned and managed by you or your organization.

Cloud: Azure SQL Product Family

With the rise of the enterprise cloud, Microsoft began offering different flavors of Microsoft SQL Server in Azure. Each has its own advantages/disadvantages and level of tradeoff between administration/management responsibilities and freedom/flexibility. These offerings include:

- SQL Server on Azure Virtual Machines*

- Azure SQL Managed Instance

- Azure SQL Database

- Azure SQL Edge

NOTE: While hosted in the cloud, SQL Server on Azure VMs is the same On Prem Microsoft SQL Server product, just installed on a virtual machine running in Azure instead of on your own machine.

SQL Server Instance

A SQL Server instance is a single installation of the SQL Server software. This instance encapsulates all the other database system structures (databases, tables, data, stored procedures, security, etc.).

A given physical server can host multiple instances of SQL Server of either the same or different versions. (Certain components are shared between them, but more on that later.)

Often you will have multiple instances of SQL Server installed either on the same machine or on different machines supporting the same connected application(s) to be used for Production (PROD), Quality Assurance (QA), Testing (TEST), User Acceptance Testing (UAT), and/or Development (DEV).

SQL Server Services

SQL Server components are executable programs that run as a Windows service. Programs that run as a Windows service can continue to run even without a user actively logged in and without displaying a window or other activity in the GUI.

SQL Server Service

The executable process that is the SQL Server Database Engine. The Database Engine can be the default instance (limit one per computer) or can be one of many named instances of the Database Engine. Use SQL Server Configuration Manager to determine which instances of the Database Engine are installed on the computer. The default instance (if you install it) is listed as SQL Server (MSSQLSERVER). Named instances (if you install them) are listed as SQL Server (<instance_name>). By default, SQL Server Express is installed as SQL Server (SQLEXPRESS).

SQL Server Agent

This is a job scheduling service. It connects to the SQL Server instance and executes jobs defined by the user either on demand, on startup or by a user defined schedule. This service is not strictly necessary for SQL Server operation and is actually not included in the Express Edition of SQL Server.

SQL Server Browser

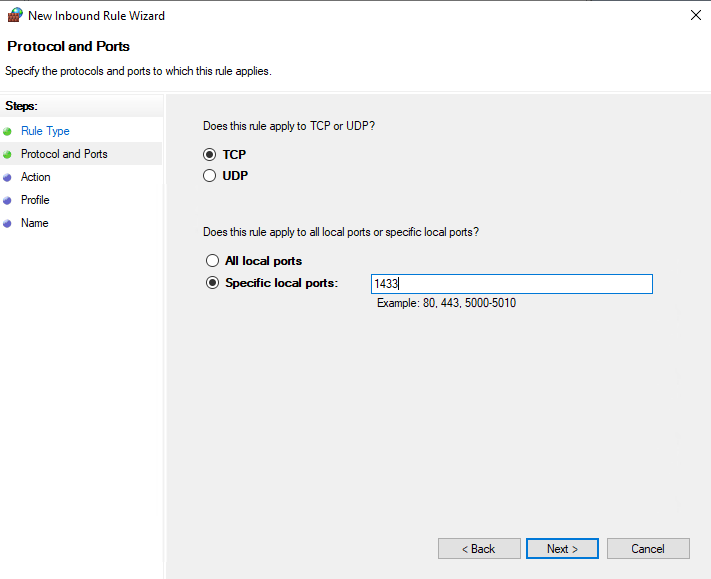

Shared and used by all installed instances of SQL Server on a given machine. It listens for incoming client requests and responds with the information needed to connect to a SQL Server instance. This service can be disabled if the only instance in use is the default instance (MSSQLSERVER) and it is running on the default static port (TCP 1433).

Host/Box/Physical Machine/Bare Metal

The hardware platform (i.e. server) with the virtualization software to support one or many Guest virtual machines. The host may also have SQL Server installed directly on it without any virtualization.

Virtual Machine (VM)

A virtual environment that functions as a virtual computer system with its own CPU, memory, network interface, and storage, simulated on physical hardware (host).

Hypervisor / Virtual Machine Monitor (VMM)

The software application running on the host that is responsible for managing one or more virtual machines.

Database

The logical container for stored data. It encapsulates all the other data structures.

System Databases

| System database | Description |

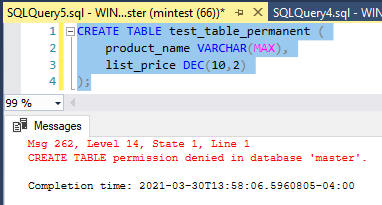

| master Database | Records all the system-level information for an instance of SQL Server. |

| msdb Database | Is used by SQL Server Agent for scheduling alerts and jobs. |

| model Database | Is used as the template for all databases created on the instance of SQL Server. Modifications made to the model database, such as database size, collation, recovery model, and other database options, are applied to any databases created afterward. |

| Resource Database | Is a read-only database that contains system objects that are included with SQL Server. System objects are physically persisted in the Resource database, but they logically appear in the sys schema of every database. |

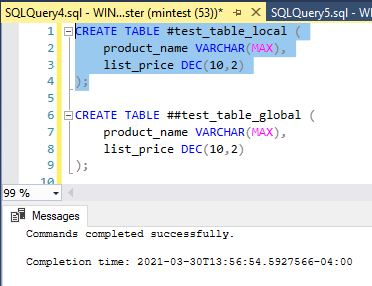

| tempdb Database | Is a workspace for holding temporary objects or intermediate result sets. |

User Databases

All other databases created on a given instance of SQL Server, each of which has a unique name and an owner. A user database has one or more schemas, and each schema has tables, indexes, stored procedures, views, functions and more.

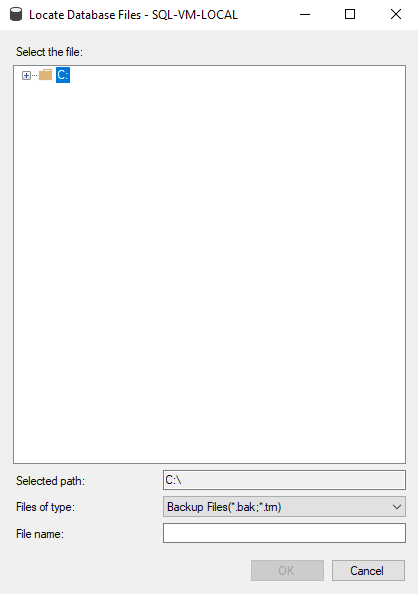

Database Files

SQL Server databases have three types of files, as shown in the following table.

| File | Description |
| --- | --- |
| Primary | Contains startup information for the database and points to the other files in the database. Every database has one primary data file. The recommended file name extension for primary data files is .MDF. |
| Secondary | Optional user-defined data files. Data can be spread across multiple disks by putting each file on a different disk drive. The recommended file name extension for secondary data files is .NDF. |
| Transaction Log | The log holds information used to recover the database. There must be at least one log file for each database. The recommended file name extension for transaction logs is .LDF. |

Schema

A schema is a logical container within a database that groups objects and lets you grant permissions on them as a unit. By default, each SQL Server database includes the dbo and sys schemas, among others. Although user defined objects can be created in the dbo schema, it is generally recommended to create a separate schema for each logical application such as sales, inventory, accounting, etc.

Table

Tables are database objects that contain all the data in a database. In tables, data is logically organized in a row-and-column format similar to a spreadsheet. In fact, a spreadsheet can be thought of as a “flat” database whereas SQL Server is a “relational database” because it is modeled around the relationships between tables.

A table is a collection of zero or more rows where each row is a sequence of one or more column values.

Row

The row is the smallest unit of data that can be inserted into a table and deleted from a table.

Cardinality

The degree of a table, and the degree of each of its rows, is the number of columns of that table. The number of rows in a table is its cardinality. A table whose cardinality is 0 (zero) is said to be empty.
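The difference between degree and cardinality is easy to see with a tiny table. The sketch below uses Python with an in-memory SQLite database as a stand-in (not SQL Server); the `product` table and the helper names `degree` and `cardinality` are invented for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in engine, not SQL Server
conn.execute("CREATE TABLE product (id INTEGER, name TEXT, price REAL)")


def degree(conn, table):
    """Number of columns: one description entry per result column."""
    cursor = conn.execute(f"SELECT * FROM {table} LIMIT 0")
    return len(cursor.description)


def cardinality(conn, table):
    """Number of rows currently in the table."""
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]


empty_cardinality = cardinality(conn, "product")  # the table starts out empty

conn.executemany(
    "INSERT INTO product VALUES (?, ?, ?)",
    [(1, "Widget", 9.99), (2, "Gadget", 24.50)],
)

print(degree(conn, "product"), empty_cardinality, cardinality(conn, "product"))
```

The degree stays fixed until the table definition changes, while the cardinality changes with every insert or delete.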

Transact-SQL (T-SQL)

A set of programming extensions from Sybase and Microsoft that add several features to the Structured Query Language (SQL), including transaction control, exception and error handling, row processing and declared variables.

Constraints

Constraints are the rules enforced on the data columns of a table. These are used to limit the type of data that can go into a table.

Referential Integrity

Although the main purpose of a foreign key constraint is to control the data that can be stored in the foreign key table, it also controls changes to data in the primary key table. To successfully change or delete a row in the primary key table that is referenced by a foreign key, you must first either delete the referencing rows in the foreign key table or change them so that they link to different primary key data.

Primary Key (PK)

A table typically has a column or combination of columns that contain values that uniquely identify each row in the table. This column, or columns, is called the primary key (PK) of the table and enforces the entity integrity of the table.

Foreign Key (FK)

A foreign key (FK) is a column or combination of columns that is used to establish and enforce a link between the data in two tables to control the data that can be stored in the foreign key table. In a foreign key reference, a link is created between two tables when the column or columns that hold the primary key value for one table are referenced by the column or columns in another table. This column becomes a foreign key in the second table.
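Here is a small sketch of primary keys, foreign keys, and referential integrity working together. It is written in Python against an in-memory SQLite database, which I am using only as a stand-in because it needs no server; the `customer` and `orders` tables and the `attempt` helper are hypothetical, and SQLite needs a PRAGMA to turn enforcement on, which is not the case in SQL Server.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in engine, not SQL Server
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK enforcement off by default

# customer is the primary key table; orders is the foreign key table.
conn.execute(
    "CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
)
conn.execute(
    """CREATE TABLE orders (
           order_id INTEGER PRIMARY KEY,
           customer_id INTEGER NOT NULL REFERENCES customer (customer_id)
       )"""
)
conn.execute("INSERT INTO customer VALUES (1, 'Ada')")
conn.execute("INSERT INTO orders VALUES (100, 1)")


def attempt(sql):
    """Run a statement and report whether the constraint allowed it."""
    try:
        conn.execute(sql)
        return "ok"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


# An order may not point at a customer that does not exist.
orphan_insert = attempt("INSERT INTO orders VALUES (101, 999)")
# A customer may not be deleted while an order still references it.
parent_delete = attempt("DELETE FROM customer WHERE customer_id = 1")
# Once the referencing row is gone, the delete succeeds.
conn.execute("DELETE FROM orders WHERE customer_id = 1")
parent_delete_after = attempt("DELETE FROM customer WHERE customer_id = 1")

print(orphan_insert, parent_delete, parent_delete_after, sep="\n")
```

The first two attempts are rejected by the constraint and the last one goes through, which is the "delete or change the foreign key data first" rule in action.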

Indexes (IX)

An index is an on-disk structure associated with a table or view that speeds retrieval of rows from the table or view. An index contains keys built from one or more columns in the table or view. These keys are stored in a structure (B-tree) that enables SQL Server to find the row or rows associated with the key values quickly and efficiently.

Heap

A heap is a table without a clustered index. One or more nonclustered indexes can be created on tables stored as a heap. Data is stored in the heap without specifying an order. Usually data is initially stored in the order in which the rows are inserted into the table, but the Database Engine can move data around in the heap to store the rows efficiently, so the data order cannot be predicted.

Clustered Index (CI)

A clustered index sorts and stores the data rows of the table or view in order based on the clustered index key. The clustered index is implemented as a B-tree index structure that supports fast retrieval of the rows, based on their clustered index key values.

Non Clustered Index (NCI)

A nonclustered index can be defined on a table or view with a clustered index or on a heap. Each index row in the nonclustered index contains the nonclustered key value and a row locator. This locator points to the data row in the clustered index or heap having the key value. The rows in the index are stored in the order of the index key values, but the data rows are not guaranteed to be in any particular order unless a clustered index is created on the table.
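The idea of "key value plus row locator" can be modeled in a few lines of Python. This is a toy model only: a real index is a B-tree on disk, whereas here a sorted list with binary search plays that role, and the `heap` list, `name_index`, and `seek` function are all made up for illustration.

```python
import bisect

# Heap: rows stored in no particular order; the list position acts as the row locator.
heap = [
    {"id": 42, "name": "Widget"},
    {"id": 7, "name": "Gadget"},
    {"id": 19, "name": "Sprocket"},
]

# Nonclustered index on "name": (key value, row locator) pairs kept in key order.
name_index = sorted((row["name"], locator) for locator, row in enumerate(heap))


def seek(index, key):
    """Binary-search the index for the key, then follow the locator into the heap."""
    pos = bisect.bisect_left(index, (key,))
    if pos < len(index) and index[pos][0] == key:
        return heap[index[pos][1]]
    return None


print(name_index)
print(seek(name_index, "Widget"))
print(seek(name_index, "Missing"))
```

The index entries are ordered by name even though the heap is not, and finding a row takes a search of the small index followed by one jump to the data row rather than a scan of every row.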

Query

A query is a question or inquiry about a set of data. In SQL Server we use T-SQL to retrieve meaningful and relevant information from databases. When building a query, we pull data from tables and fields. The fields are columns in the database table, while the actual data makes up the rows.

Stored Procedure (SP)

A stored procedure in SQL Server is an executable object that consists of a group of one or more Transact-SQL statements or a reference to a Microsoft .NET Framework common language runtime (CLR) method. They can accept input parameters, call other stored procedures, and return success/failure messages.

Common Language Runtime (CLR)

With the CLR hosted in Microsoft SQL Server (called CLR integration), you can author stored procedures, triggers, user-defined functions, user-defined types, and user-defined aggregates in managed code. Because managed code compiles to native code prior to execution, you can achieve significant performance increases in some scenarios.

Ad Hoc Query

Ad hoc is Latin for “for this purpose”. Sometimes ad hoc queries may be informally referred to as “on the fly” because, unlike stored procedures, they are not predefined. A query such as SELECT * FROM sys.databases is an example of an ad hoc query that you may issue from SSMS. However, ad hoc queries can also be dynamically generated by code on the application side and executed against SQL Server.

Data Manipulation Language (DML)

These are statements used to retrieve and work with data. Microsoft SQL Server uses the following DML statements:

- BULK INSERT
- DELETE
- INSERT
- MERGE
- READTEXT
- SELECT
- UPDATE
- UPDATETEXT
- WRITETEXT

Data Definition Language (DDL)

These are statements that define data structures. Use these statements to create, alter, or drop data structures in a database. Microsoft SQL Server uses the following DDL statements:

- ALTER
- CREATE
- DISABLE TRIGGER
- DROP
- ENABLE TRIGGER
- RENAME
- TRUNCATE TABLE
- UPDATE STATISTICS

Data Control Language (DCL)

These statements are used for access control and permission management for users in the database. Use these statements to allow or deny some actions for users on the tables or records. Microsoft SQL Server uses the following DCL statements:

- GRANT
- REVOKE
- DENY

Transaction Control Language (TCL)

These statements are used for managing transactions in the database. Use these to manage the transactions that are performed by DML statements. Microsoft SQL Server uses the following TCL statements:

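- BEGIN TRANSACTION (BEGIN TRAN)
- COMMIT TRANSACTION (COMMIT TRAN)
- ROLLBACK TRANSACTION (ROLLBACK TRAN)
- SAVE TRANSACTION (SAVE TRAN), which sets a savepoint

NOTE: Autocommit is not a statement. It is the default transaction mode, in which each individual statement is committed automatically if it succeeds.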
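To see what committing and rolling back actually do, here is a minimal sketch in Python using an in-memory SQLite database as a stand-in for SQL Server. SQLite spells the statements BEGIN, COMMIT, and ROLLBACK rather than the T-SQL forms, and the `account` table and `balances` helper are hypothetical.

```python
import sqlite3

# isolation_level=None hands transaction control to the explicit statements below.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE account (account_id INTEGER PRIMARY KEY, balance REAL)")
conn.execute("INSERT INTO account VALUES (1, 100.0), (2, 0.0)")


def balances():
    """Return the current balance of every account, ordered by id."""
    return conn.execute(
        "SELECT balance FROM account ORDER BY account_id"
    ).fetchall()


# A transfer that is abandoned halfway: ROLLBACK undoes the debit.
conn.execute("BEGIN")
conn.execute("UPDATE account SET balance = balance - 40 WHERE account_id = 1")
conn.execute("ROLLBACK")
after_rollback = balances()

# The same transfer completed: both updates become permanent together.
conn.execute("BEGIN")
conn.execute("UPDATE account SET balance = balance - 40 WHERE account_id = 1")
conn.execute("UPDATE account SET balance = balance + 40 WHERE account_id = 2")
conn.execute("COMMIT")
after_commit = balances()

print(after_rollback, after_commit)
```

After the rollback the balances are unchanged, and after the commit the debit and the credit have been applied as a single unit.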

Function

A stored program that accepts parameters and returns a value, much the same as a function in any programming language.

View

A view is a virtual table whose contents are defined by a query. Like a table, a view consists of a set of named columns and rows of data. You can think of it as stored instructions for how to piece together data from one or more tables. If not indexed, the view does not persist as stored data (i.e. the result is generated again each time the view is used).
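The sketch below shows that a non-indexed view stores only its query, not its results. It uses Python with an in-memory SQLite database as a stand-in for SQL Server; the `customer` and `orders` tables and the `customer_totals` view are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in engine, not SQL Server
conn.executescript(
    """
    CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);

    -- The view stores only this query, not its results.
    CREATE VIEW customer_totals AS
        SELECT c.name, SUM(o.total) AS total_spent
        FROM customer AS c
        JOIN orders AS o ON o.customer_id = c.customer_id
        GROUP BY c.name;

    INSERT INTO customer VALUES (1, 'Ada');
    INSERT INTO orders VALUES (100, 1, 25.0);
    """
)

before = conn.execute("SELECT * FROM customer_totals").fetchall()

# Change the underlying table; the view is never touched directly.
conn.execute("INSERT INTO orders VALUES (101, 1, 10.0)")

after = conn.execute("SELECT * FROM customer_totals").fetchall()

print(before, after)
```

The second read of the view reflects the new order because the view's query runs again against the underlying tables each time it is used.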

Triggers

A mechanism which fires a particular stored procedure in response to an event.

Logon Triggers

Logon triggers fire stored procedures in response to a LOGON event. This event is raised when a user session is established with an instance of SQL Server.

DDL Trigger

DDL triggers fire in response to a variety of Data Definition Language (DDL) events. These events primarily correspond to Transact-SQL statements that start with the keywords CREATE, ALTER, DROP, GRANT, DENY, REVOKE or UPDATE STATISTICS.

DML Trigger

A DML trigger is a special type of stored procedure that automatically takes effect when a data manipulation language (DML) event takes place that affects the table or view defined in the trigger. DML events include INSERT, UPDATE, or DELETE statements. DML triggers can be used to enforce business rules and data integrity, query other tables, and include complex Transact-SQL statements.
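As a rough illustration of a DML trigger, the sketch below records every balance change in an audit table. It is written in Python against an in-memory SQLite database as a stand-in, so the trigger syntax is SQLite's (OLD and NEW row references) and not T-SQL, where a trigger reads the inserted and deleted tables instead. The `account` and `account_audit` tables and the trigger name are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in engine, not SQL Server
conn.executescript(
    """
    CREATE TABLE account (account_id INTEGER PRIMARY KEY, balance REAL NOT NULL);
    CREATE TABLE account_audit (account_id INTEGER, old_balance REAL, new_balance REAL);

    -- Fires automatically after any UPDATE that changes the balance column.
    CREATE TRIGGER trg_account_update
    AFTER UPDATE OF balance ON account
    BEGIN
        INSERT INTO account_audit
        VALUES (OLD.account_id, OLD.balance, NEW.balance);
    END;

    INSERT INTO account VALUES (1, 100.0);
    """
)

# The application only issues the UPDATE; the audit row is written by the trigger.
conn.execute("UPDATE account SET balance = 75.0 WHERE account_id = 1")
audit_rows = conn.execute("SELECT * FROM account_audit").fetchall()

print(audit_rows)
```

Nothing in the application code writes to the audit table, which is what makes triggers useful for enforcing rules that must hold no matter which client changes the data.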

Tools

Below are some of the tools that are commonly used when interacting with Microsoft SQL Server.

SQL Server Management Studio (SSMS)

Manage a SQL Server instance or database with full GUI support. Access, configure, manage, administer, and develop all components of SQL Server, Azure SQL Database, and Azure Synapse Analytics. Provides a single comprehensive utility that combines a broad group of graphical tools with a number of rich script editors to provide access to SQL for developers and database administrators of all skill levels.

Azure Data Studio

A light-weight editor that can run on-demand SQL queries, view and save results as text, JSON, or Excel. Edit data, organize your favorite database connections, and browse database objects in a familiar object browsing experience.

SQL Server Data Tools (SSDT)

A modern development tool for building SQL Server relational databases, Azure SQL databases, Analysis Services (AS) data models, Integration Services (IS) packages, and Reporting Services (RS) reports. With SSDT, you can design and deploy any SQL Server content type with the same ease as you would develop an application in Visual Studio.

SQLCMD

A utility that lets you enter Transact-SQL statements, system procedures, and script files at the command prompt.

SQL Server PowerShell

Provides cmdlets for working with SQL Server.

SQL Server Configuration Manager

Use SQL Server Configuration Manager to configure SQL Server services and configure network connectivity. Configuration Manager runs on Windows.

NOTE: See here for a more comprehensive and current list of Microsoft SQL Server first party tools.

Summary

This introduction to the series is by no means meant to be a comprehensive overview of the SQL Server product, but more of a primer to familiarize you with some of the concepts and terminology before we dive deeper. In the next post we will introduce the Transact-SQL (T-SQL) language and the anatomy of transactions.